A General Framework for Tracking Multiple People from a Moving Camera

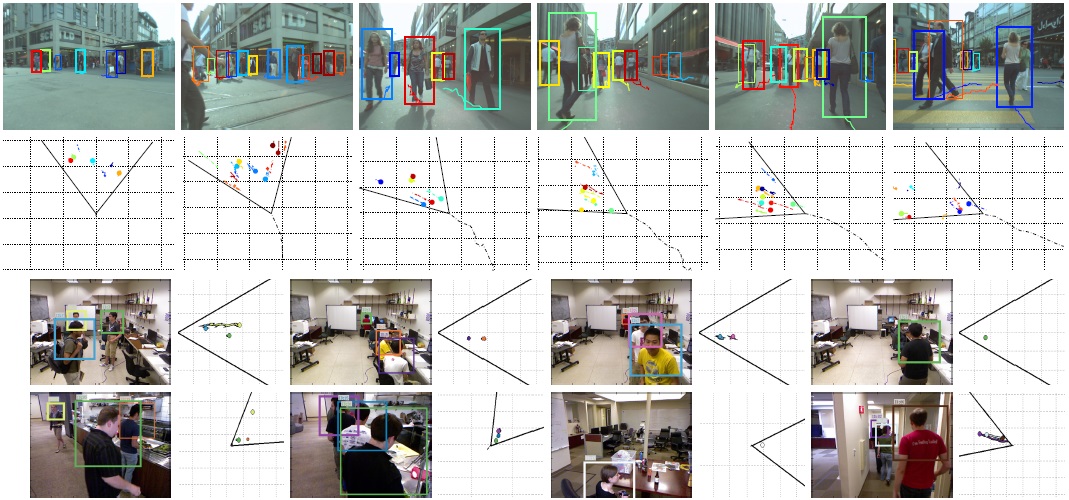

We present a general framework for tracking multiple, possibly interacting, people from a mobile vision platform. In order to determine all of the trajectories robustly and in a 3D coordinate system, we estimate both the camera's ego-motion and the people's paths within a single coherent framework. The tracking problem is framed as finding the MAP solution of a posterior probability, and is solved using the Reversible Jump Markov Chain Monte Carlo Particle Filtering method. We evaluate our system on challenging datasets taken from moving cameras, including an outdoor street scene video dataset, as well as an indoor RGB-D dataset collected in an office. Experimental evidence shows that the proposed method can robustly estimate a camera's motion from dynamic scenes and stably track people who are moving independently or interacting.

W. Choi, C. Pantofaru, S. Savarese. "A General Framework for Tracking Multiple People from a Moving Camera" in Pattern Analysis and Machine Intelligence (PAMI), 2013. [preprint][bibtex]

Related Publications

W. Choi, C. Pantofaru, S. Savarese. "Detecting and Tracking People using an RGB-D Camera via Multiple Detector Fusion" in CORP (in conjunction with ICCV), 2011. [pdf][bibtex]

W. Choi and S. Savarese. "Multiple Target Tracking in World Coordinate with Single, Minimally Calibrated Camera" in ECCV, 2010. [pdf][bibtex]

The previous project webpage can be found here. This project is sponsored by NSF EAGER (award #1052762) and Toyota. This work is in collaboration with Dr. Caroline Pantofaru (Willow Garage).

Updates

Source Code

The source code (monocular camera version) used in the PAMI13 publication is available below. To download the code, launch an SVN client and check out the code from the following repository (e.g. svn co link):

- Version 0.5 release (August 7th, 2012): C++ code with a demo at http://mtt-umich.googlecode.com/svn/tags/release-0.5

The source code for the PR2 platform will be available soon. The code is released under the BSD license.

Dataset Downloads

The recommended method for downloading the data is to use wget:

1) PNG + DAT tarballs

There are two RGB+D datasets we used in the PAMI paper. To download all tarballs of the PR2 dataset, run:

wget -c -i http://cvgl.stanford.edu/data2/pr2dataset/pr2pngdat_all.txt

To download all tarballs of the Umich (static) dataset, run:

wget -c -i http://cvgl.stanford.edu/data2/pr2dataset/umpngdat_all.txt

2) ROS Bag files

You can also download rosbag files that are compatible with ROS fuerte. To download all bag files of the PR2 dataset, run:

wget -c -i http://cvgl.stanford.edu/data2/pr2dataset/pr2bag_all.txt

To download all bag files of the Umich (static) dataset, run:

wget -c -i http://cvgl.stanford.edu/data2/pr2dataset/umbag_all.txt

3) Annotations

The annotations of the PR2 dataset can be downloaded here and the annotations of the UM dataset can be downloaded here.

4) Matlab tools

You can download the necessary Matlab tools for the dataset (e.g. dat file reader, annotation reader, visualization samples, etc.) here.

The data is released under a Creative Commons license.
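For readers who would rather script the downloads in 1) and 2) than call wget directly, the following is a minimal Python sketch of the same idea: fetch one of the list files, then fetch every URL it names. It assumes each list file is plain text with one absolute URL per line, which is the usual input for wget -i but has not been verified against these particular files. The function names (parse_url_list, local_name, download_all) are hypothetical helpers and are not part of the released code or tools.

```python
import os
import urllib.request
from urllib.parse import urlparse


def parse_url_list(text):
    """Return the http(s) URLs in a list file (assumed: one absolute URL per line)."""
    urls = []
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines, comments, and anything that is not an absolute URL.
        if line.startswith(("http://", "https://")):
            urls.append(line)
    return urls


def local_name(url):
    """File name to save a URL under: the last component of its path."""
    return os.path.basename(urlparse(url).path)


def download_all(list_url, dest_dir="."):
    """Fetch the list file at list_url, then download every file it names."""
    os.makedirs(dest_dir, exist_ok=True)
    with urllib.request.urlopen(list_url) as resp:
        urls = parse_url_list(resp.read().decode("utf-8", errors="replace"))
    for url in urls:
        target = os.path.join(dest_dir, local_name(url))
        if os.path.exists(target):
            continue  # already complete; skip it
        # Download to a temporary name so an interrupted transfer is never
        # mistaken for a finished file on the next run.
        partial = target + ".part"
        urllib.request.urlretrieve(url, partial)
        os.replace(partial, target)
    return urls


# Example call (performs network access, so it is left commented out):
# download_all("http://cvgl.stanford.edu/data2/pr2dataset/pr2pngdat_all.txt", "pr2_pngdat")
```

Unlike wget -c, this sketch does not resume a partially transferred file; it restarts that file from the beginning and only skips files that finished in an earlier run. For the larger tarballs and bag files, wget remains the recommended method.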

Last updated on 07/25/2014