Relating Things and Stuff via Object Property Interactions

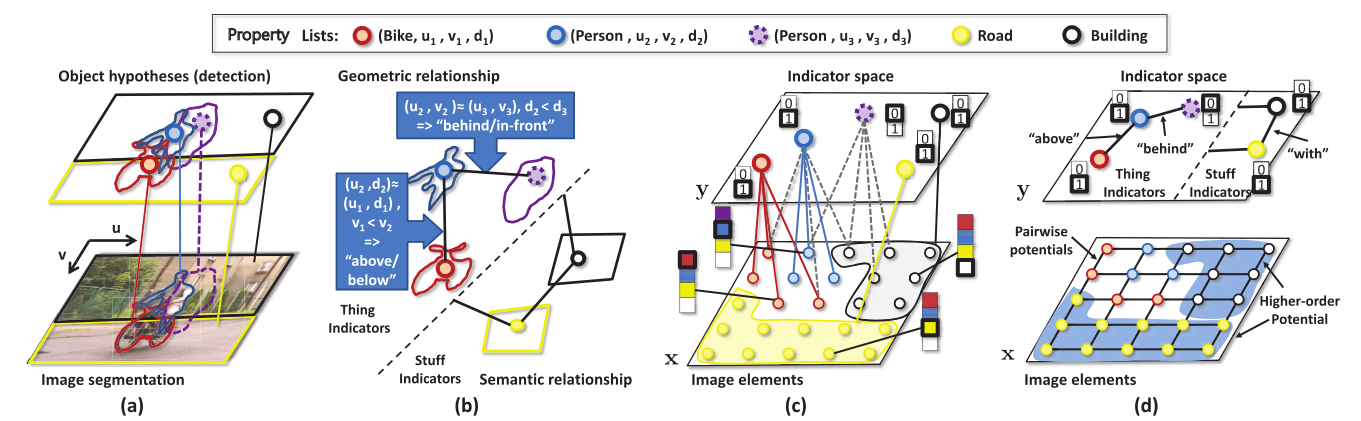

In the last few years, substantially different approaches have been adopted for segmenting and detecting “things” (object categories with a well-defined shape, such as people and cars) and “stuff” (object categories with an amorphous spatial extent, such as grass and sky). While things are typically detected by sliding-window or Hough-transform-based methods, detecting stuff is generally formulated as a pixel-wise or segment-wise classification problem. This paper proposes a framework for scene understanding that models both things and stuff using a common representation while preserving their distinct nature through a property list. This representation allows us to enforce sophisticated geometric and semantic relationships between thing and stuff categories in a single graphical model. We use recent advances in discrete optimization to efficiently perform maximum a posteriori (MAP) inference in this model. We evaluate our method on the Stanford dataset by comparing it against state-of-the-art methods for object segmentation and detection. We also show that our method achieves competitive performance on the challenging PASCAL’09 segmentation dataset.
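To make the idea of a shared representation concrete, here is a toy Python sketch of things and stuff handled as one hypothesis type, told apart by a property list and coupled through pairwise thing-stuff terms whose joint energy is minimized (MAP inference). This is our own illustration under stated assumptions, not the authors' model: each candidate is a binary keep/discard variable, and the `Hypothesis` class, its `score` and `properties` fields, the `COMPATIBILITY` table and all energy weights are hypothetical. Exhaustive search also stands in for the discrete optimization methods used in the paper.

```python
from dataclasses import dataclass, field
from itertools import product

@dataclass
class Hypothesis:
    """Hypothetical candidate object; things and stuff share this one type."""
    name: str
    score: float  # assumed detector/classifier confidence in [0, 1]
    properties: dict = field(default_factory=dict)  # property list, e.g. category, is_thing

# Hypothetical thing-stuff compatibilities (negative energy = compatible pair).
COMPATIBILITY = {("car", "road"): -0.6, ("car", "sky"): 1.5}

def unary(h: Hypothesis, on: int) -> float:
    # Keeping a hypothesis costs (0.5 - score), so confident candidates lower the energy.
    return (0.5 - h.score) if on else 0.0

def pairwise(a: Hypothesis, b: Hypothesis, on_a: int, on_b: int) -> float:
    # Only a kept thing and a kept stuff region interact; the property list tells them apart.
    if not (on_a and on_b) or a.properties["is_thing"] == b.properties["is_thing"]:
        return 0.0
    thing, stuff = (a, b) if a.properties["is_thing"] else (b, a)
    key = (thing.properties["category"], stuff.properties["category"])
    return COMPATIBILITY.get(key, 0.0)

def energy(hyps: list, labels: tuple) -> float:
    # Total energy = sum of unary terms + sum of pairwise terms over all pairs.
    total = sum(unary(h, l) for h, l in zip(hyps, labels))
    for i in range(len(hyps)):
        for j in range(i + 1, len(hyps)):
            total += pairwise(hyps[i], hyps[j], labels[i], labels[j])
    return total

def map_brute_force(hyps: list) -> tuple:
    # Exhaustive MAP inference over all 2^n keep/discard labelings (toy sizes only).
    return min(product((0, 1), repeat=len(hyps)), key=lambda ls: energy(hyps, ls))

hyps = [
    Hypothesis("car1", 0.45, {"category": "car", "is_thing": True}),
    Hypothesis("road1", 0.7, {"category": "road", "is_thing": False}),
    Hypothesis("sky1", 0.6, {"category": "sky", "is_thing": False}),
]
print(map_brute_force(hyps))  # (1, 1, 0)
```

In this toy run the car hypothesis is too weak to be kept on its own (its unary cost is positive), but the compatible road region lowers the joint energy enough to switch it on, while the incompatible sky hypothesis is switched off. Exhaustive search needs 2^n energy evaluations, which is why realistic models call for efficient MAP solvers.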


Publications

- Min Sun*, Byung-soo Kim*, Pushmeet Kohli, and Silvio Savarese, "Relating Things and Stuff via Object Property Interactions." IEEE Transactions on Pattern Analysis and Machine Intelligence (PAMI), 2014.

- Byung-soo Kim*, Min Sun*, Pushmeet Kohli, and Silvio Savarese, "Relating Things and Stuff by High-Order Potential Modeling." ECCV'12 Workshop on Higher-Order Models and Global Constraints in Computer Vision.

- Min Sun*, Byung-soo Kim*, Pushmeet Kohli, and Silvio Savarese, "Relating Things and Stuff via Object Property Interactions." Submitted to TPAMI.

(* indicates equal contribution.)

Acknowledgements

We acknowledge the support of the Gigascale Systems Research Center and NSF CPS grant #0931474.


Contact: sunmin at umich dot edu and bsookim at umich dot edu

Last update: September 4, 2012